New Home Server

Much like in my other recent article about my workstation upgrade, I decided to give my home server a big performance boost. This machine has gone through many hardware iterations. Here are just the CPUs that I can think of: Athlon X64, Xeon X3000 series, Celeron 847, i3, and most recently, a Haswell i5-4590. I have also experimented with and learned many different storage technologies during this journey. It began with mdadm+LVM, then moved on to ZFS in Solaris -> OpenIndiana -> OmniOS -> Linux, and now I’m back to using Ubuntu 18.04 with SnapRAID + mergerfs for my bulk media and ZFS for my boot pool and Docker containers. This has proven to be a very flexible solution for my home server media setup.

All of this is re-purposed or used gear. This setup works great and is a huge performance improvement over my old setup. Adding all this CPU horsepower does increase the total power draw compared with a single-CPU system on a newer architecture. At idle, the power draw went up about 65 watts over the i5-4590 system with 1 HBA + SAS expander. At full load, that difference is a whole lot bigger 🙂

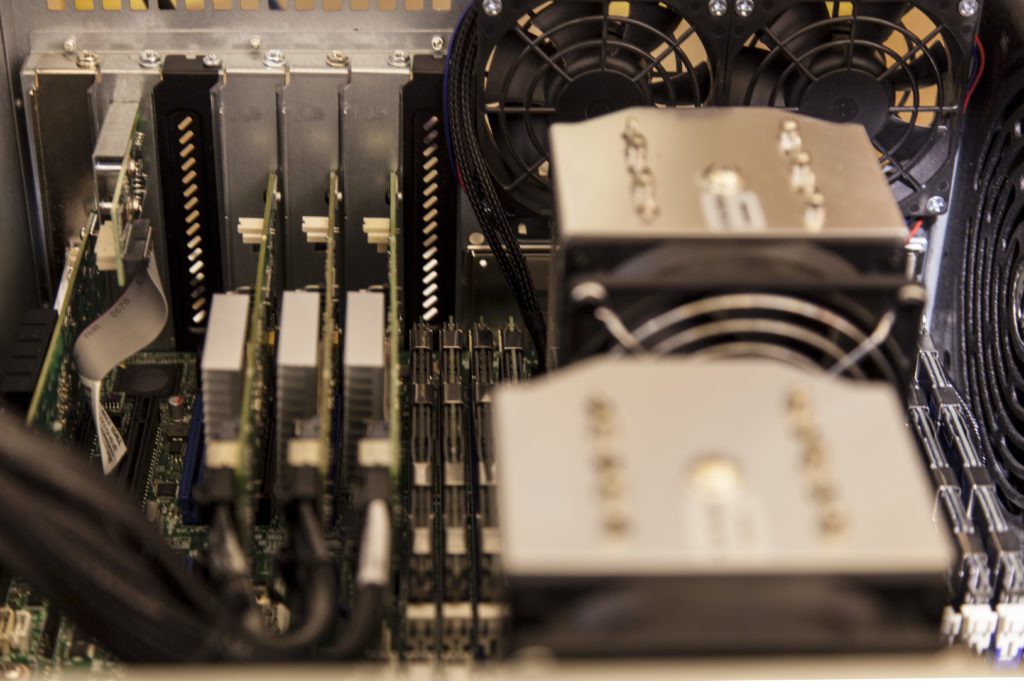

Home Server Parts List

CASE: Norco 4224

PSU: EVGA Supernova 750 G2

MOBO: Intel S2600CP

CPU: 2x Intel Xeon E5-2670 v1 SR0KX (16 physical cores and 32 threads total)

RAM: 16x Hynix 8GB DDR3 ECC RAM (128GB total)

CPU Heatsinks: 2 x Supermicro SNK-P0050AP4

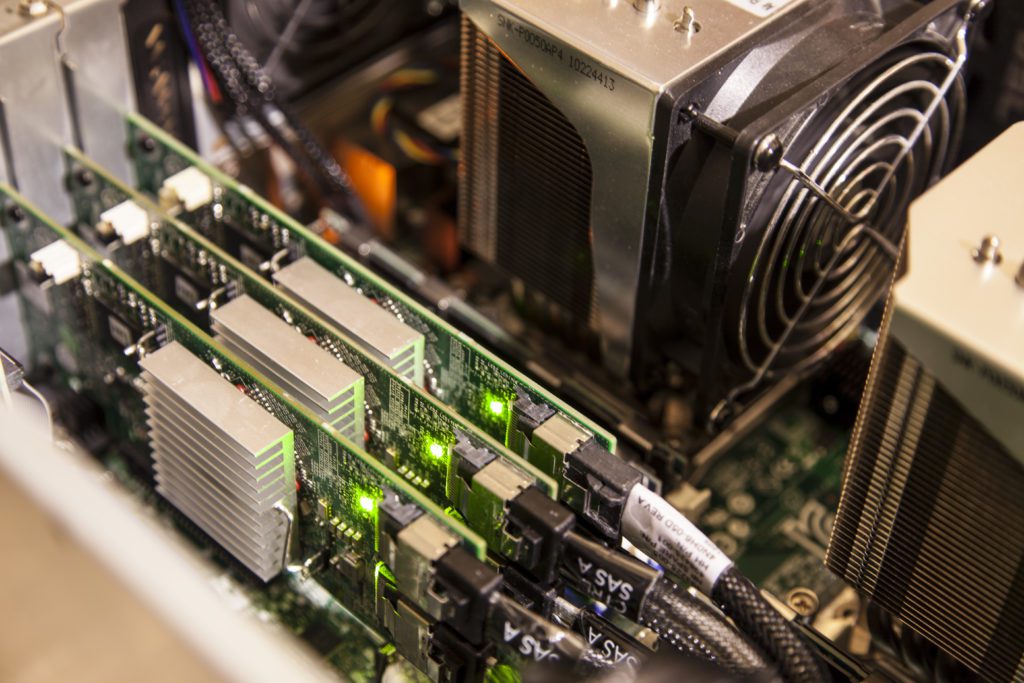

OS HD: (2) Intel S3700 400GB in ZFS mirror (an Intel 730 is shown in the pictures below).

HDs: (8) HGST He8 8TB, (8) WD Red 6TB, and (8) HGST NAS 4TB. Using SnapRAID with triple parity + mergerfs for 128TB usable

HBAs: 3x Dell H310s flashed to the latest P20 IT firmware

ADDON: Mellanox ConnectX-2 10GbE over fiber to my workstation upstairs

ADDON: Intel AXXRMM4(+Lite) Modules for iKVM

This server hosts my files via SnapRAID + mergerfs, and runs Docker containers for a number of things, including CrashPlan, Plex, PlexPy, UniFi, etc.

Hi Zack,

A question: why do you use 3x PERC H310s in your build? One H310 supports 16 disks.

Many thanks for your explanation.

My bad, it is an 8-port card 🙂 I need a coffee. 3 x 8 = 24. Good morning 😛

No problem. I’m glad you got it figured out 🙂

Hi Zack,

1) I currently have 6 TB of data: family photos/videos, my Blu-ray rips, docs. I have no backup solution at the moment except disconnected USB drives that sum up to 6 TB. Yes, I know... it’s time for a REAL backup solution.

2) Proposing to buy one CPU (Xeon class) and 5 drives (8 TB each for future data), can I tell UnRAID: “please use 2 of these drives for parity and the other 3 for data”? If the 3 disks are exclusively for data, isn’t retrieval going to be really slow (read access)? What about write access times? The 1 gig NIC is not the issue here since you can only go as fast as your slowest component: your HD (7200 rpm and 240 MB/s max random reads). So what do you suggest I do to speed up my 3 drives so I can access/write data? Include a RAID card and do a RAID ‘?’ on those 3 drives OR buy one more drive and do a RAID 10?

3) I would also like to use the above for virtualization labs and containers (so do I need more space? or RAM? or both?). I am planning to procure 64 gigs of ECC RAM. Do I need SAS drives as opposed to SATA drives?

4) BTW, the monstrosity server you built is err… impressive and $$$.

Any input you can suggest would be greatly appreciated, as you already set up a template for me to build from, but I am unsure about the build for my budget.

Hello 🙂

1. First, congrats on the choice to back up your data. Luckily 6TB isn’t that much to back up, but you will want to consider how important each part is. For example, I have local versioned backups of my family photos/videos and documents. I also back all of these files up to Backblaze B2. That way, even if I have a flood or fire, I can still restore all of those photos.

2. I typically use SnapRAID, not UnRAID, but both of these systems rely on the same basic concept. UnRAID costs money, but it does provide realtime parity vs. SnapRAID’s snapshot parity. UnRAID can also use SSD/NVMe drives as cache drives for writes to speed up access.
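
To illustrate the basic concept that parity-based setups share, here is a minimal Python sketch of single XOR parity. It is only an illustration under simplifying assumptions, not how SnapRAID or UnRAID are actually implemented: the function names and the tiny 4-byte "disks" are hypothetical, and real tools work on whole disks or files and use more elaborate codes for additional parity levels.

```python
from functools import reduce


def xor_parity(blocks):
    """Return the byte-wise XOR of a list of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving_blocks, parity):
    """Recover one lost block by XOR-ing the parity with the surviving blocks."""
    return xor_parity(list(surviving_blocks) + [parity])


# Three hypothetical data "disks", each holding one 4-byte block.
disk1, disk2, disk3 = b"fam1", b"mov2", b"doc3"

# The parity "disk" stores the XOR of all data blocks.
parity = xor_parity([disk1, disk2, disk3])

# Simulate losing disk2 and rebuilding it from the other disks plus parity.
recovered = rebuild_missing([disk1, disk3], parity)
print(recovered == disk2)
```

With realtime parity the parity block is updated on every write, while with snapshot parity it is only recomputed when a sync is run, so anything written since the last sync is not yet protected.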

With modern disks, your NIC will likely be the bottleneck on both reads and writes if you are going over the network. Almost all modern 8TB disks read and write WAY over 100 MB/s, which is (roughly) what your gigabit NIC will limit you to. So, if gigabit speeds over the network are okay, you will be just fine with UnRAID or SnapRAID. And, yes, you do just configure the software to use two disks for parity and three for data. That would be a very safe configuration. That being said, I store different types of data in different storage arrays. I use SnapRAID for all bulk media (TV, movies, etc.). I use ZFS raidz2 on top of SAS SSDs for all of my family media.
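
The arithmetic behind this can be sketched in a few lines of Python. This is a rough back-of-the-envelope estimate, not a benchmark: the 180 MB/s disk rate is an assumed figure for a modern large disk, the helper names are hypothetical, and the link figures ignore protocol overhead (which is why real gigabit transfers land closer to 100-115 MB/s than the theoretical 125 MB/s).

```python
def nic_limit_mb_s(link_mbit_s):
    """Theoretical ceiling of a network link in MB/s (8 bits per byte, no overhead)."""
    return link_mbit_s / 8


def network_transfer_rate(disk_mb_s, link_mbit_s):
    """A transfer over the network runs at the speed of the slower component."""
    return min(disk_mb_s, nic_limit_mb_s(link_mbit_s))


def usable_tb(total_disks, parity_disks, disk_tb):
    """Usable space when all disks are the same size and some are dedicated to parity."""
    return (total_disks - parity_disks) * disk_tb


ASSUMED_DISK_MB_S = 180  # assumption: sequential rate of a modern 8TB disk

gigabit = network_transfer_rate(ASSUMED_DISK_MB_S, 1000)    # the NIC is the limit
ten_gig = network_transfer_rate(ASSUMED_DISK_MB_S, 10000)   # the disk is the limit
capacity = usable_tb(total_disks=5, parity_disks=2, disk_tb=8)

print(f"1GbE:  {gigabit} MB/s")
print(f"10GbE: {ten_gig} MB/s")
print(f"5 x 8TB with 2 parity disks: {capacity} TB usable")
```

Under these assumptions, a single disk already outruns a gigabit link, and the five-disk layout from the question leaves 24 TB for data.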

3. What do you plan to virtualize/containerize? That will really drive your space and RAM needs. But, I can comfortably say you should be just fine with 64GB of RAM. And, you don’t need to buy SAS disks over SATA, unless you can find a good deal on used enterprise SAS disks (these are pretty much all I have bought lately).

4. Thanks! This server is expensive, but really not that crazy. I have bought everything used (other than the PSU and case) and it really does keep the costs down.

If you could provide more info on what things you plan to virtualize, I’d be happy to give you a sample build. It would be nice to know if you have any parts you plan to use already (case, PSU, etc), and if you have a range for a budget (without the cost of hard drives).

1. Subscribed to Backblaze B2. Thanks!

2. I was not 100% sure about UnRAID, but now that I have read about SnapRAID I will go with this option (even a GUI is available). To be honest, I am a speed monster and I do not like slowness over my OWN network at home. So would I need to procure a NIC that supports SFPs (overkill or)? What switch do you recommend for the future to compensate for the bigger pipe? An 8-port switch is fine in my case. This bit: “. I use ZFS raidz2 on top of SAS SSDs for all of my family media” Workstation is a new build (still building it) and the server will also be a new build. Like you stated, 6 TB is not too much data but my wife forgot to mention she got a bunch of disks as well for backup = another ~2 TB in addition to the gargantuan online photo collection (this woman loves to take photos). Might have to go with a bigger HD (10 or 12 TB). d) Virtualize a couple of Linux distros to play around with.

Knowing now that the bottleneck is NOT the HDs but the NIC, like you stated, a Xeon class server would suffice with 64 gigs of ECC RAM. I do not know if I need the newer Xeons or not for what I am trying to accomplish, but internal network speed is a must! I do not have any spare hard drives bigger than 1 TB (I have very old HDs that have accumulated dust: WD 250 gig). So... what I am thinking is most probably SATA 12 TB disks. I have a spare case (EATX with space for 8 HDs) and a 1000 watt PSU, but no mobo, super-fast NIC, RAM, CPU, or CPU cooler. Budget: no more than $4k for the server (no HDs). The reason behind this is longevity... I do not want to pinch pennies on server hardware (first build).

One thing to note: I do not have space for a rackmount solution, hence I will be using my olde EATX case for the server.

Thanks Zack! 🙂

I think something is wrong, as my text is being cut off.

Here it is again:

2. This bit: ‘I use ZFS raidz2 on top of SAS SSDs for all of my family media’ Workstation is a new build (still building it)…etc